Introduction

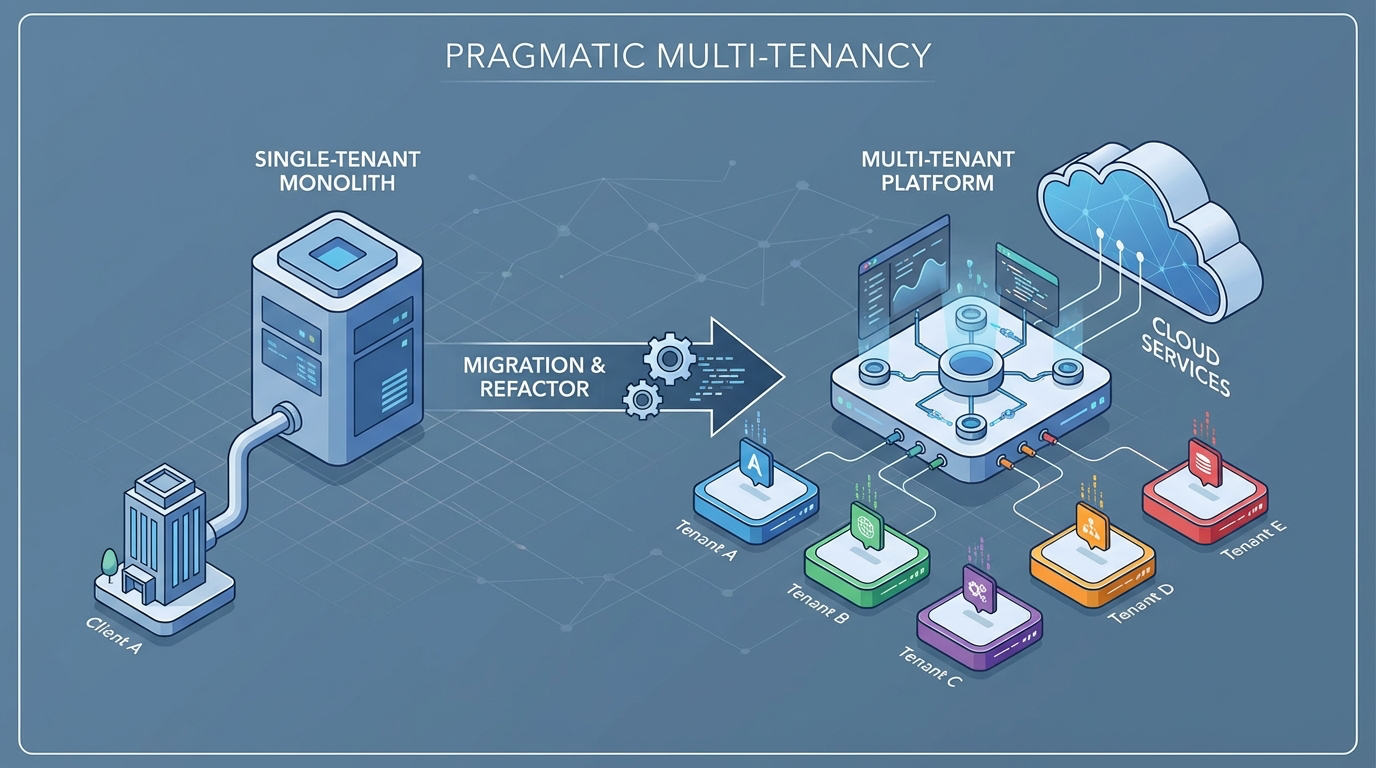

Scaling a software product often involves a critical decision point: do I deploy a new instance for every new client, or do I re-architect the existing service to handle everyone at once?

I recently navigated this transition with a backend chat service. The goal was to move from a single-tenant model to a multi-tenant architecture. The challenge wasn’t just the code; it was doing it efficiently, without inflating the infrastructure budget with new components like Redis or complicating the stack with a heavyweight authentication server.

Here is how I designed a pragmatic, secure, and cost-effective solution.

1. Rethinking Authentication for Client-Side Apps

In a traditional multi-tenant B2B setup, you might reach for complex OAuth flows immediately. My requirements were different, though: multiple frontend applications needed to connect to the backend, and I needed a lightweight way to secure them without adding friction.

Instead of a heavy auth layer, I implemented a Public Key + Dynamic CORS strategy.

- The Public Key: Each tenant is assigned a unique public identifier (X-Tenant-ID). This isn’t a secret; it’s an ID embedded in the tenant’s frontend code.

- The Guard: Security comes from an origin policy rather than from a secret. I use strict, dynamic CORS (Cross-Origin Resource Sharing).

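When a request hits our server, the middleware checks the Origin header against an allowlist stored in that tenant’s configuration. If the request comes from an unauthorized domain, even with a valid Tenant ID, it is rejected immediately. This gives browser-based clients a solid layer of protection without the overhead of token management: because browsers set the Origin header themselves and page scripts cannot change it, another website cannot reuse a tenant’s public ID. The guarantee is specific to browsers, since a non-browser client can send any Origin header it likes.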
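Here is a minimal sketch of that origin check, assuming the tenant’s configuration has already been loaded. The function name checkTenantOrigin, the trimmed TenantCorsConfig type, and the 401/403 split are illustrative choices, not the service’s actual code. It is framework-agnostic so it could be called from any HTTP middleware.

```typescript
// Hypothetical minimal tenant shape: only the fields this check needs.
interface TenantCorsConfig {
  apiKey: string; // the public X-Tenant-ID value
  isActive: boolean;
  allowedOrigins: string[]; // exact origins, e.g. "https://app.client-a.com"
}

type CorsDecision =
  | { allowed: true; headers: Record<string, string> }
  | { allowed: false; status: 401 | 403; reason: string };

// Decide whether a browser request from `origin` may use this tenant's public ID.
function checkTenantOrigin(
  tenant: TenantCorsConfig | undefined,
  origin: string | undefined,
): CorsDecision {
  // Unknown or disabled tenant ID: reject before looking at the origin.
  if (!tenant || !tenant.isActive) {
    return { allowed: false, status: 401, reason: "unknown or inactive tenant" };
  }
  // Missing Origin header, or an origin not on this tenant's allowlist: reject.
  // Exact string comparison on purpose: no wildcards, no substring matching.
  if (!origin || tenant.allowedOrigins.indexOf(origin) === -1) {
    return { allowed: false, status: 403, reason: "origin not allowed" };
  }
  // Echo the specific origin back (never "*"), and tell caches the response varies by Origin.
  return {
    allowed: true,
    headers: {
      "Access-Control-Allow-Origin": origin,
      Vary: "Origin",
    },
  };
}
```

One subtlety: X-Tenant-ID is a custom header, so browsers send a preflight OPTIONS request first, and that preflight carries only the header’s name (in Access-Control-Request-Headers), not its value. The preflight answer therefore can’t be tenant-specific unless the tenant is identifiable some other way; the per-tenant check above is what guards the actual request.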

2. The Migration: From Environment to Database

The most significant shift in moving from single to multi-tenant is how you handle configuration.

In the single-tenant version, everything lived in process.env. The database URL, the cloud provider secrets, the API keys—they were all static. To support multiple tenants, I had to make the application context-aware.

I migrated these configurations into a MongoDB collection. Now, instead of booting up with a single identity, the application boots up “neutral” and resolves which tenant it is serving on each request.
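As an illustration of that migration step, here is a sketch of a one-off seeding helper that turns the old single-tenant environment into the first tenant document. The environment variable names, the tenantFromEnv helper, and the default of 100 connections are hypothetical; the document shape follows the tenant model in the next section.

```typescript
// Hypothetical document shape, matching the tenant model fields used in this article.
interface TenantDoc {
  apiKey: string;
  allowedOrigins: string[];
  providerConfig: {
    connectionString: string;
    resources: { endpoint: string; region: string };
  };
  isActive: boolean;
  maxConcurrentConnections: number;
}

type Env = Record<string, string | undefined>;

// Read a required variable or fail loudly, so a half-filled document is never written.
function requireVar(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value === "") {
    throw new Error(`Missing environment variable: ${name}`);
  }
  return value;
}

// Convert the old single-tenant environment into the first tenant document.
// Variable names are placeholders for whatever the original .env actually used.
function tenantFromEnv(env: Env, apiKey: string, allowedOrigins: string[]): TenantDoc {
  // Optional setting; the default of 100 is an assumption.
  const maxConnections = parseInt(env["MAX_CONCURRENT_CONNECTIONS"] ?? "100", 10);
  if (isNaN(maxConnections) || maxConnections <= 0) {
    throw new Error("MAX_CONCURRENT_CONNECTIONS must be a positive integer");
  }
  return {
    apiKey,
    allowedOrigins,
    providerConfig: {
      connectionString: requireVar(env, "PROVIDER_CONNECTION_STRING"),
      resources: {
        endpoint: requireVar(env, "PROVIDER_ENDPOINT"),
        region: requireVar(env, "PROVIDER_REGION"),
      },
    },
    isActive: true,
    maxConcurrentConnections: maxConnections,
  };
}
```

In a seed script, the returned document could be written with the MongoDB driver’s insertOne on the tenant collection; from then on, the service no longer reads these values from process.env.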

3. The Data Blueprint

To visualize this transformation, here is a simplified TypeScript sketch of the tenant model I designed. This object acts as the “source of truth” for everything the system needs to know about a specific client.

```typescript
// A simplified view of our Tenant Model
interface ITenantConfig {
  // Public Identifier: Exposed to frontend clients (sent as X-Tenant-ID)
  apiKey: string;

  // Security: The "Firewall" rules (Dynamic CORS)
  allowedOrigins: string[]; // e.g., ["https://client-a.com", "https://app.client-a.com"]

  // Context: Infrastructure credentials (stored securely)
  providerConfig: {
    connectionString: string;
    resources: {
      endpoint: string; // e.g., "https://tenant-resource.communication.azure.com"
      region: string; // e.g., "eastus"
    };
  };

  // Operational Settings
  isActive: boolean;
  maxConcurrentConnections: number;
}
```

With a unique index on apiKey, a single lookup retrieves this document and immediately provides all the security and infrastructure context required to process the request.
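Because this configuration now lives in a database instead of in code, the interface above is only a compile-time promise: a malformed document would still type-check. A small runtime guard closes that gap. This is a hand-written sketch (isTenantConfig and its helpers are hypothetical names); a schema-validation library could do the same job.

```typescript
// Same shape as the ITenantConfig interface above, repeated so this sketch stands alone.
interface ITenantConfig {
  apiKey: string;
  allowedOrigins: string[];
  providerConfig: {
    connectionString: string;
    resources: { endpoint: string; region: string };
  };
  isActive: boolean;
  maxConcurrentConnections: number;
}

type Doc = Record<string, unknown>;

// Small helpers for checking values of unknown shape.
const isDoc = (v: unknown): v is Doc => typeof v === "object" && v !== null && !Array.isArray(v);
const isNonEmptyString = (v: unknown): v is string => typeof v === "string" && v.length > 0;

// Runtime check for a document read from the tenant collection (extra fields such as _id are ignored).
function isTenantConfig(doc: unknown): doc is ITenantConfig {
  if (!isDoc(doc)) return false;

  const provider = doc["providerConfig"];
  if (!isDoc(provider) || !isNonEmptyString(provider["connectionString"])) return false;

  const resources = provider["resources"];
  if (!isDoc(resources) || !isNonEmptyString(resources["endpoint"]) || !isNonEmptyString(resources["region"])) {
    return false;
  }

  const origins = doc["allowedOrigins"];
  const maxConnections = doc["maxConcurrentConnections"];
  return (
    isNonEmptyString(doc["apiKey"]) &&
    Array.isArray(origins) &&
    origins.every(isNonEmptyString) &&
    typeof doc["isActive"] === "boolean" &&
    typeof maxConnections === "number" &&
    maxConnections > 0
  );
}
```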

4. Dynamic Configuration & Request Scoping

This leads to the core architectural pattern: Request-Scoped Context.

I moved away from global singletons. In the old code, a database client might have been initialized once at startup. In the new architecture, I leverage middleware to “hydrate” the request.

- Intercept: The request arrives with a Tenant ID.

- Load: The middleware fetches the specific configuration (connection strings, secrets, policies) for that tenant.

- Inject: This configuration is attached to the request object.

This allows the service to morph its behavior for every single HTTP request. One request might route messages to an Azure resource in the US, while the very next request routes to a different resource in Europe, completely transparently to the core logic.
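The Intercept, Load, and Inject steps can be sketched as a framework-agnostic function. The loader is passed in so the example runs without a database; in the real service it would be a MongoDB query on apiKey. ScopedRequest, TenantLoader, hydrateTenant, and the status codes are assumptions for illustration, and the origin check from section 1 would sit between Load and Inject.

```typescript
// Trimmed copy of the tenant model so this sketch stands alone.
interface TenantConfig {
  apiKey: string;
  allowedOrigins: string[];
  providerConfig: {
    connectionString: string;
    resources: { endpoint: string; region: string };
  };
  isActive: boolean;
  maxConcurrentConnections: number;
}

// Hypothetical loader; in the service this would query the tenant collection by apiKey.
type TenantLoader = (apiKey: string) => Promise<TenantConfig | null>;

// Minimal request shape. Header names are lower-cased, as Node's http module delivers them.
interface ScopedRequest {
  headers: Record<string, string | undefined>;
  tenant?: TenantConfig; // filled in by hydrateTenant, scoped to this request only
}

type HydrateResult = { ok: true } | { ok: false; status: 400 | 401 };

// Intercept -> Load -> Inject, run once per incoming request.
async function hydrateTenant(req: ScopedRequest, loadTenant: TenantLoader): Promise<HydrateResult> {
  // Intercept: read the public tenant ID sent by the frontend.
  const tenantId = req.headers["x-tenant-id"];
  if (!tenantId) {
    return { ok: false, status: 400 };
  }

  // Load: fetch this tenant's configuration (connection strings, policies).
  const tenant = await loadTenant(tenantId);
  if (!tenant || !tenant.isActive) {
    return { ok: false, status: 401 };
  }

  // Inject: attach the config to this request; nothing goes into module-level state.
  req.tenant = tenant;
  return { ok: true };
}
```

Because the tenant lives on the request object rather than in a module-level variable, two concurrent requests for different tenants never see each other’s configuration.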

Multi-Tenant Request Flow

Here’s a visual representation of how requests are processed in our multi-tenant architecture:

```mermaid
graph TD
A[Client Request] --> B{Auth Middleware}
B -->|Extract X-Tenant-ID| C[Fetch Tenant Config]
C --> D[MongoDB: Tenant Collection]
D -->|Config| E[CORS Validation]
E -->|Origin Check| F{Valid Origin?}
F -->|No| G[403 Forbidden]
F -->|Yes| H[Inject Config to Request]
H --> I[Initialize Provider Client]
I --> J{Which Provider?}
J -->|Azure US| K[Azure East Resource]
J -->|Azure EU| L[Azure Europe Resource]
K --> M[Process Business Logic]
L --> M
M --> N[Return Response]
style A fill:#f9a825
style D fill:#42a5f5
style F fill:#ef5350
style M fill:#66bb6a
```

Each request bootstraps its own isolated context based on tenant configuration.

5. Keeping it Lean: Why I Skipped Redis

A common knee-jerk reaction when building high-performance architectures is “we need a cache layer!” or “let’s add Redis for sessions!”

I made a conscious decision to avoid adding new infrastructure components like Redis at this stage. Why?

- Complexity Cost: Every new service increases maintenance overhead and deployment complexity.

- Performance Sufficiency: The primary database (MongoDB) is already fast enough for this workload. Reading a small tenant document by its key is a cheap query, and its latency is small relative to the client’s own network round-trip to the server.

- Cost Efficiency: By utilizing existing resources, I kept the cloud bill flat while increasing capability.

I can always introduce a caching layer later if read-heavy traffic demands it, but I don’t start with optimization I don’t need.

6. Architecture Ready for Scale

Finally, this refactoring effectively decoupled our business logic from the infrastructure providers.

By abstracting the tenant configuration, the core application doesn’t “know” it’s talking to a specific cloud resource. It simply asks for a “Communication Client” and the factory pattern provides one based on the current context.

This design gives immense flexibility. If I need to switch one tenant to a different provider, or migrate a high-volume tenant to a dedicated database cluster, I can do it just by changing a configuration document—no code deployment required.
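A minimal sketch of such a factory, assuming a provider discriminator field that the simplified tenant model shown earlier does not include. CommunicationClient, AzureClient, InMemoryClient, createCommunicationClient, and postWelcome are hypothetical names, and AzureClient is only a stub: the actual Azure SDK calls are deliberately omitted rather than guessed.

```typescript
// What business logic depends on: no provider, region, or credentials visible.
interface CommunicationClient {
  sendMessage(threadId: string, text: string): Promise<string>; // resolves to a message ID
}

// Hypothetical per-tenant provider settings; the `provider` discriminator is an assumed addition.
type ProviderSettings =
  | { provider: "azure"; endpoint: string; connectionString: string }
  | { provider: "in-memory" };

// Stub for an Azure-backed client. A real implementation would call the Azure SDK here.
class AzureClient implements CommunicationClient {
  constructor(private readonly settings: { endpoint: string; connectionString: string }) {}

  async sendMessage(_threadId: string, _text: string): Promise<string> {
    throw new Error(`AzureClient for ${this.settings.endpoint} is a stub in this sketch`);
  }
}

// Local/test provider that records messages in memory.
class InMemoryClient implements CommunicationClient {
  readonly sent: { threadId: string; text: string }[] = [];

  async sendMessage(threadId: string, text: string): Promise<string> {
    this.sent.push({ threadId, text });
    return `msg-${this.sent.length}`;
  }
}

// The factory: the only place that knows which concrete provider a tenant uses.
function createCommunicationClient(settings: ProviderSettings): CommunicationClient {
  switch (settings.provider) {
    case "azure":
      return new AzureClient(settings);
    case "in-memory":
      return new InMemoryClient();
    default: {
      // Compile-time exhaustiveness check: a new provider without a case fails to compile.
      const unhandled: never = settings;
      throw new Error(`Unknown provider: ${JSON.stringify(unhandled)}`);
    }
  }
}

// Stand-in for business logic: it only ever sees the interface.
function postWelcome(client: CommunicationClient, threadId: string): Promise<string> {
  return client.sendMessage(threadId, "Welcome to the chat!");
}
```

Switching a tenant to another provider then means changing the provider value in its document; postWelcome, standing in for the business logic, never changes.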

Conclusion

Migrating to multi-tenancy doesn’t always require a rewrite of your entire stack or a massive budget increase. By focusing on smart middleware, dynamic configuration, and leveraging existing tools, I built a system that is secure, flexible, and ready for growth.
